2025: A Look Back

So another year’s almost over. And you know what that means: time for a look back - from the perspective of someone who spends an unhealthy amount of time in DevTools and terminal windows.

And yes… obviously this will also be about AI. Not just about AI, but a lot of AI, because it influenced basically everything in 2025, whether we like it or not.

And believe me: I’m not a fan of having AI everywhere. But it is what it is. So let’s take a step back and talk about what actually happened.

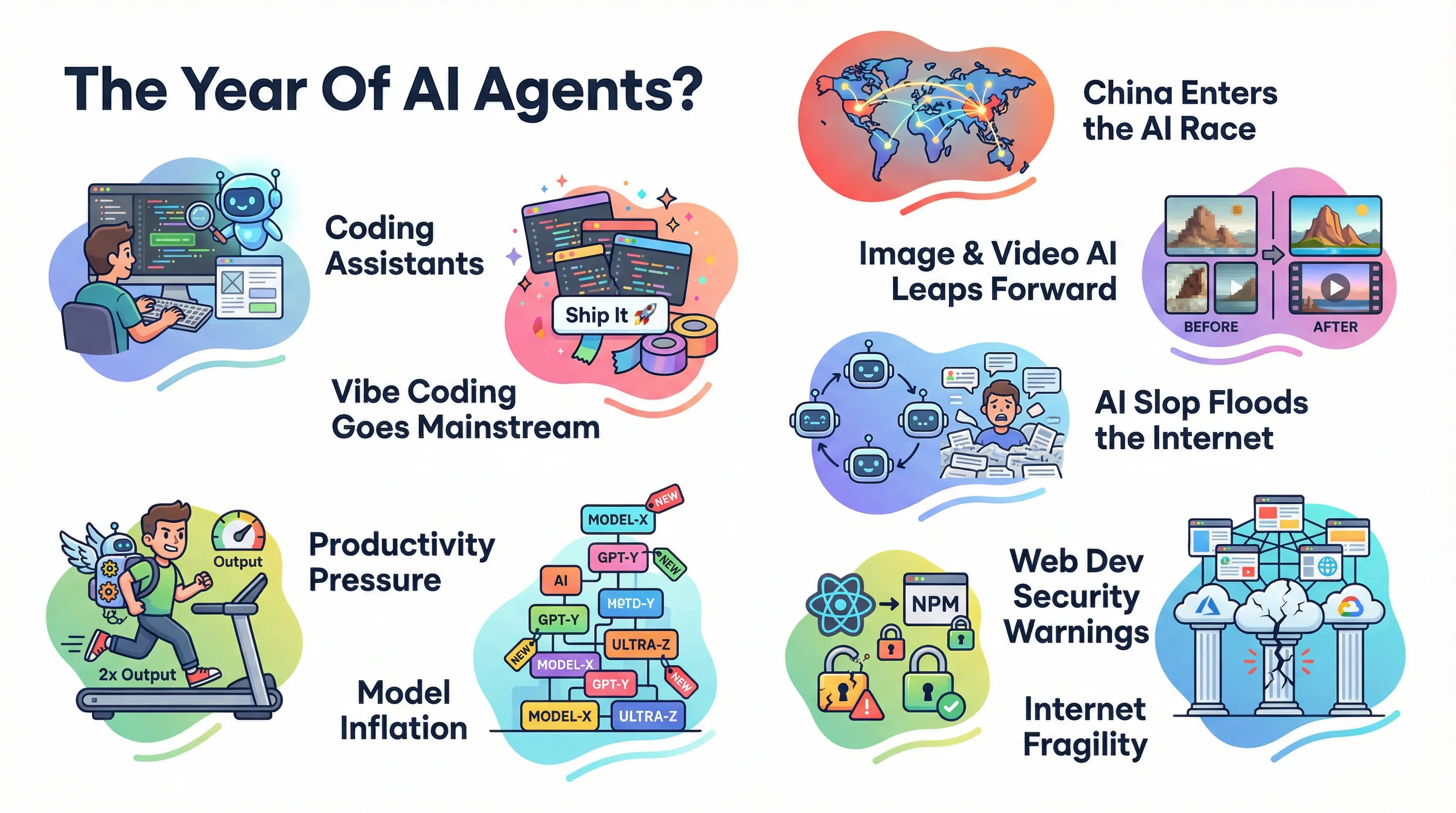

2025 Was Supposed To Be “The Year of AI Agents”

You probably remember the headline. “2025 is the year of AI agents.” It was everywhere at the start of the year. And honestly… it quickly turned into one of those terms that means whatever the speaker needs it to mean.

Because for a lot of people, an “AI agent” basically became:

“Anything that uses AI for anything.”

You wrote a script that uses an LLM to summarize a PDF? Congrats, you built an AI agent.

So yes: we did see real progress in what I’d call agentic workflows. But we should be honest about what that usually means in practice.

What “agentic workflows” really are (in 2025 terms)

Depending on your definition, it can mean anything… but the useful definition is this: AI doesn’t just output text - it makes decisions, and those decisions result in actions, often via tools (APIs, file edits, browser automation, etc.).

And yes, it’s still token generation. But tokens can describe tool calls. And tool calls can change real systems. That’s the core of “agents”.
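To make that concrete, here is a minimal sketch of the loop behind most “agents”. Everything in it is an assumption for illustration: `fake_model` stands in for a real LLM call, and `read_file` and `add` are made-up tools rather than any vendor’s API. The point is only that the model emits text, some of that text parses as a tool call, and the surrounding code turns it into an action.

```python
import json
from typing import Callable

# Hypothetical tools: plain functions the "agent" is allowed to call.
def read_file(path: str) -> str:
    fake_fs = {"notes.txt": "ship on Friday"}  # in-memory stand-in for a real file system
    return fake_fs.get(path, "")

def add(a: float, b: float) -> float:
    return a + b

TOOLS: dict[str, Callable[..., object]] = {"read_file": read_file, "add": add}

def fake_model(history: list[str]) -> str:
    """Stand-in for an LLM. It only ever returns text; on the first turn
    that text happens to be a JSON description of a tool call."""
    if len(history) == 1:
        return json.dumps({"tool": "read_file", "args": {"path": "notes.txt"}})
    return "Final answer: " + history[-1]

def run_agent(task: str, max_steps: int = 5) -> str:
    history = [task]
    for _ in range(max_steps):
        output = fake_model(history)
        try:
            call = json.loads(output)
        except json.JSONDecodeError:
            return output  # plain text, so the model is done
        if not isinstance(call, dict) or call.get("tool") not in TOOLS:
            return output  # valid JSON, but not a known tool call
        # This is the step where generated tokens become a real action.
        result = TOOLS[call["tool"]](**call["args"])
        history.append(f"tool result: {result}")
    return "stopped: step limit reached"

print(run_agent("What do my notes say?"))
```

Swap the fake tool for one that edits files or calls an API and the same loop changes real systems, which is exactly why the step limit and the allow-list of tools matter.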

Coding Assistants Are The One Category That Actually Stuck

The most obvious “agents” we all interacted with this year weren’t some fancy corporate workflow automation platform. They were coding assistants.

This didn’t start in 2025, obviously. But 2025 was the year where the ecosystem got… loud. We saw a ton of development in tools like Cursor, Claude Code, and the broader “IDE becomes an agent” trend.

And to be clear: these tools can be genuinely useful. They can generate boilerplate fast, scaffold features from instructions, help you explore APIs you don’t know yet, speed up refactors, and reduce the “blank page” problem.

But they also created (or amplified) another big theme:

“Vibe Coding” Went Mainstream

“Vibe coding” (as popularized this year) is basically: don’t read the code too much, go with the vibes, if it works ship it, and if it doesn’t tell the AI to “fix it”.

That can work for prototypes, throwaway scripts, one-time utilities, or exploring an idea. I’ve tried my fair share of this. Results were… mixed. And for anything serious, I’m still convinced:

Expertise + AI > Vibe Coding

If you actually know what you’re doing - and you use AI as an assistant - you get so much better outcomes than if you outsource the whole brain.

Vibe coding platforms (Lovable, v0, and friends) are here, and they’ll probably stay. But “it’s possible” isn’t the same as “it’s a good idea”. Because the moment you stop understanding what you’re shipping, you stop being an engineer and start being a prompt operator with production access. That’s not a great job title.

Agents In ChatGPT (And “Deep Research”) Are Cool… But Not Universal

OpenAI (and others) pushed hard on “AI that does stuff for you” - clicking around websites, filling forms, performing research tasks, summarizing sources.

This is useful. But if I’m honest, it doesn’t feel like a feature everyone uses constantly. It’s more like: amazing in specific moments, but not something that replaced entire workflows for most people.

And the same goes for “deep research” modes in general. They can be great starting points. But using the output as-is is still usually a mistake. The best pattern is still: use AI to accelerate the first draft, validate it with your own expertise, do additional research where needed, then iterate.

I personally like using deep research tools as an assistant, not a brain transplant.

The Big Corporate Promise Didn’t Fully Land

Here’s the thing: if “agents everywhere” actually happened, you’d feel it. And I don’t think most companies do.

Instead, what we saw was a lot of this: companies want to use AI, executives feel like they have to, so they scramble to find use cases… and it’s hard.

Coding assistance is one of the few clear wins. But beyond that? Many “agent” stories still feel like demos looking for a business model.

And yes - there were also stories this year about major AI products not delivering on hype, or not being adopted as aggressively as expected. That doesn’t mean AI isn’t real. It means: adoption is messy.

The Most Toxic Trend: “Do Double The Work Or Else”

Let’s talk about something uglier. There are absolutely teams right now where the vibe is:

“You either output 2x-3x with AI… or we reduce headcount.”

That’s a horrible situation. Because AI can make you more productive. But 2x-3x as a sustained expectation across real-world systems? I’m not convinced.

AI is a great assistant. It is not a great replacement. And treating it like a replacement leads to exactly what you’d expect: worse quality, more outages, more security problems, burned out teams, and fragile systems held together by vibes.

Model Inflation: It Felt Like A New Release Every Week

2025 also had a very specific kind of chaos: model inflation. It genuinely felt like every week there was a new flagship model, a new “Pro” variant, a new benchmark victory lap, or a new “this changes everything” thread.

As a web developer, it reminded me of the framework wars era - except worse. Because at least in the framework wars, you could pick one and build an app. With models, you’re constantly asking: Which one is actually good in practice? Which one is good for my use case? Which one is good this week, not just on paper?

And we saw this pattern multiple times: model looks amazing on benchmarks, public opinion is “not excited”, and it “falls flat” in day-to-day use. Benchmarks don’t tell the full story. They never did.

The China Factor Got Impossible To Ignore

The year also started with a shock: new models coming out of China that people didn’t see coming, and that appeared to compete aggressively - sometimes with a narrative of being trained more cheaply than expected. Fast forward to the end of 2025, and it’s pretty clear: Chinese models matter (text, image, video), they’re part of the global landscape now, and they’re not going away.

Whatever your take on geopolitics or supply chains, the competitive dynamic is real. 2026 is not going to be a “US-only” AI story.

Image + Video Generation Improved… A Lot

Text models aren’t everything. This year, image and video generation made leaps that are honestly hard to explain to someone who hasn’t been watching the space closely. Compared to 2024: output quality is higher, character consistency is better, motion is better, and even audio/storytelling tooling is improving.

This allowed me to build side-businesses like AI Business Headshots and AI-powered (info)graphics. But let’s be honest - there’s a huge downside:

AI Slop: The Internet Is Becoming Bots Reading Bots

A significant share of online content and traffic is now AI-generated: written by bots, scraped by bots, summarized by bots, reposted by bots, and consumed by other bots.

Two or three years ago, AI-generated content was easy to spot because it was… bad. Now it’s everywhere. And it’s accelerating.

AI can produce incredibly useful things - icons, images, summaries, drafts, tooling. But the slop problem is real: timelines full of garbage, SEO pages that exist only to capture queries, and “content” that nobody actually wanted to create or read.

Web Development Wasn’t Only AI: Frameworks, Security, And Fragility

Even if you try to avoid AI discourse, web development still had a busy year. We got new releases across the usual suspects - React 19.2, Next.js 16, Angular 19 and 20 - plus lots of other frameworks and libraries, of course.

But 2025 also had a very strong security theme.

React + Server Components had a rough moment

There were vulnerabilities reported related to React and React Server Components. That alone is noteworthy - but what makes it scarier is the larger trend: as React becomes the default for more and more apps, it becomes a more attractive target.

NPM supply chain attacks continued (and they’re not slowing down)

We also saw multiple NPM-related attacks: packages compromised, malicious code spreading across dependencies, and real risk of credentials being stolen or machines being targeted.

Even experienced developers install packages and trust the ecosystem. And that ecosystem is… extremely exploitable.

Now add vibe coding into the mix - people shipping dependency soups they don’t understand - and you get a future that’s going to be very fun for attackers. And that’s before we even talk about AI being used for writing malicious code faster, scaling social engineering, generating realistic voices, and producing convincing phishing.
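One small habit that helps with the “trust the ecosystem” problem is knowing which of your dependencies run code at install time. The sketch below assumes an npm lockfile (v2 or v3), where entries under `packages` carry a `hasInstallScript` flag when a package declares install scripts. Treat it as a rough illustration rather than a security tool: the sample data is made up, `some-native-addon` is a hypothetical package, and install scripts are only one possible attack path, not a claim about how any specific incident worked.

```python
def packages_with_install_scripts(lockfile: dict) -> list[str]:
    """Return lockfile paths of packages flagged as having install scripts."""
    flagged = []
    for path, meta in lockfile.get("packages", {}).items():
        # The empty key "" is the root project itself, so skip it.
        if path and meta.get("hasInstallScript"):
            flagged.append(path)
    return sorted(flagged)

# Made-up lockfile fragment for illustration; in a real project you would
# load package-lock.json with json.load() instead.
sample_lock = {
    "lockfileVersion": 3,
    "packages": {
        "": {"name": "my-app"},
        "node_modules/left-pad": {"version": "1.3.0"},
        "node_modules/some-native-addon": {
            "version": "2.0.0",
            "hasInstallScript": True,
        },
    },
}

for path in packages_with_install_scripts(sample_lock):
    print("runs code on install:", path)
```

A flagged package isn’t automatically malicious (native addons legitimately need build steps), but it is a short, reviewable list of things that execute on your machine before you have read a single line of them.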

The Internet Felt Fragile: AWS + Cloudflare Outages

We also had two big outages in the fall. AWS took significant chunks of the internet down for hours. Cloudflare also had a major incident, though on a smaller scale.

Not the first outages ever, obviously. But having major incidents close together is a reminder: the internet is “decentralized” in theory, but in practice it’s very dependent on a few giant providers.

If one big region has a bad day, an absurd number of sites have a bad day. That’s… not a new insight. But it felt very real this year.

The “Winning Stack” Problem: React + TS + Next + Tailwind + ChatGPT

Here’s a take that might annoy some people: It looks like AI has a favorite stack. And that’s a problem.

Not because those tools are bad (they’re great). But because innovation benefits from competition and diversity. When the world converges too hard on one default stack, you get monoculture risk (security + ecosystem fragility), less exploration, less weirdness, and less innovation at the edges.

And AI can unintentionally amplify that, because it tends to regurgitate what it sees most. If the internet trains the model on “React + Next + Tailwind everywhere”, then surprise: the model suggests React + Next + Tailwind everywhere.

Small (But Meaningful) Trends I Actually Loved

Despite all the doom, there were plenty of things this year that made me genuinely excited to build. A few highlights:

- TanStack continuing to gain momentum (and deservedly so)

- the broader “back to monoliths / back to VPS” vibe (at least outside giant enterprises)

- TypeScript tooling getting faster and more serious

- auth getting less painful thanks to libraries like BetterAuth

- runtimes and frameworks like Bun and Hono being genuinely fun

- PostgreSQL continuing to be the quietly unstoppable foundation (and extensions like pgvector enabling real RAG apps without building a spaceship)

And browsers shipped great stuff too: Popover API, more modern CSS, and more web platform primitives that reduce the need for absurd hackery.

The frustrating part is that AI often lags behind on these newer platform capabilities. If AI is trained on yesterday’s patterns, it’ll happily generate yesterday’s solutions. So the best developers in the AI era still need to do what they always did: keep learning, keep reading docs, and keep understanding the platform.
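To show why the pgvector point from the list above isn’t spaceship territory: the retrieval step of a RAG app boils down to “find the stored vectors closest to the query vector”. Here is a pure-Python sketch of that idea using cosine distance, which is what pgvector’s `<=>` operator computes inside Postgres. The three-dimensional “embeddings” and document titles are hand-made toy data, and `top_k` is a hypothetical helper, not part of any library.

```python
import math

def cosine_distance(a: list[float], b: list[float]) -> float:
    """1 - cosine similarity: 0 means same direction, larger means less similar."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)

def top_k(query: list[float], docs: list[tuple[str, list[float]]], k: int = 2) -> list[str]:
    """Return the titles of the k documents closest to the query vector."""
    ranked = sorted(docs, key=lambda doc: cosine_distance(query, doc[1]))
    return [title for title, _ in ranked[:k]]

# Toy data: real embeddings come from a model and have hundreds of dimensions.
docs = [
    ("Postgres indexing guide", [1.0, 0.0, 0.0]),
    ("Bun runtime notes", [0.0, 1.0, 0.0]),
    ("pgvector setup", [0.9, 0.1, 0.0]),
]

print(top_k([1.0, 0.05, 0.0], docs))
```

With pgvector, the sorting happens in the database instead, roughly as an `ORDER BY embedding <=> $1 LIMIT k` clause on a table you already have, which is why it fits so nicely into an existing Postgres setup.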

Where I Landed By The End Of 2025

Web development is, in many ways, in great shape: mature frameworks, better browser APIs, stronger tooling, and more ways than ever to build things quickly.

And yes, AI can be a useful assistant. I love outsourcing certain tasks to it. I love speeding up boring parts. I love that it can help you explore unfamiliar APIs.

But I really hope we don’t get consumed by AI hype, AI slop, executives forcing AI into everything, and the idea that AI replaces developers. Because it doesn’t. Not in any responsible sense.

The best path is still: build real expertise, use AI as leverage, keep your standards high, and don’t ship vibes into production.

It might be the best time ever to be a developer. But it’s also one of the most difficult times. And that’s the 2025 vibe, in a nutshell.

If I missed something you found important this year, let me know - I try to be comprehensive, but the year was… a lot.