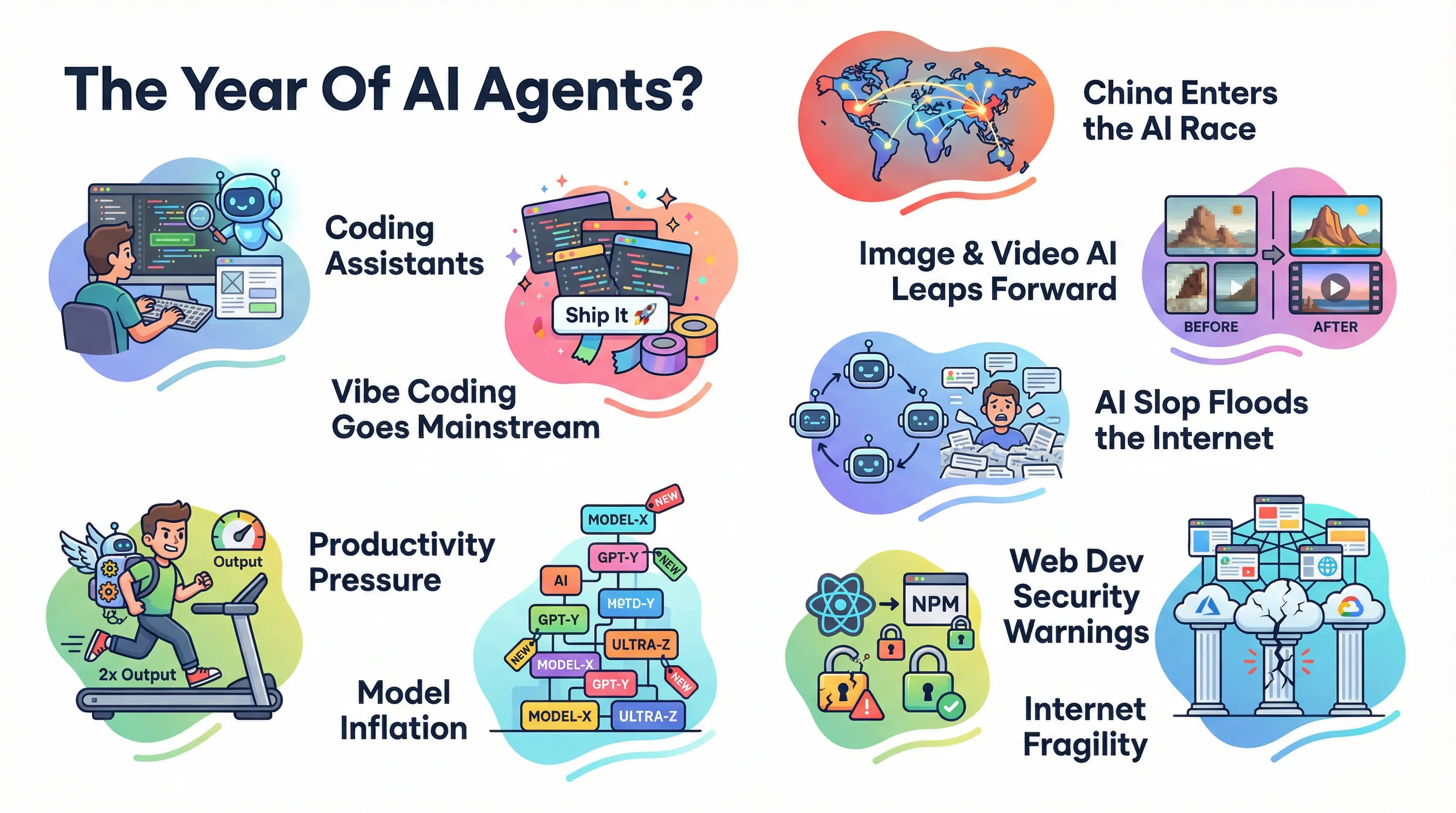

2025: A Look Back

A look back at 2025 from the perspective of a web developer - who's both excited about AI and not always thrilled about it

I write articles and share my thoughts about all kinds of things, mostly related to software development, DevOps and more.


After going on a hiring spree worth hundreds of millions this summer, Meta's layoff of 600 people seems surprising. But it appears to be part of a bigger plan and power struggle.

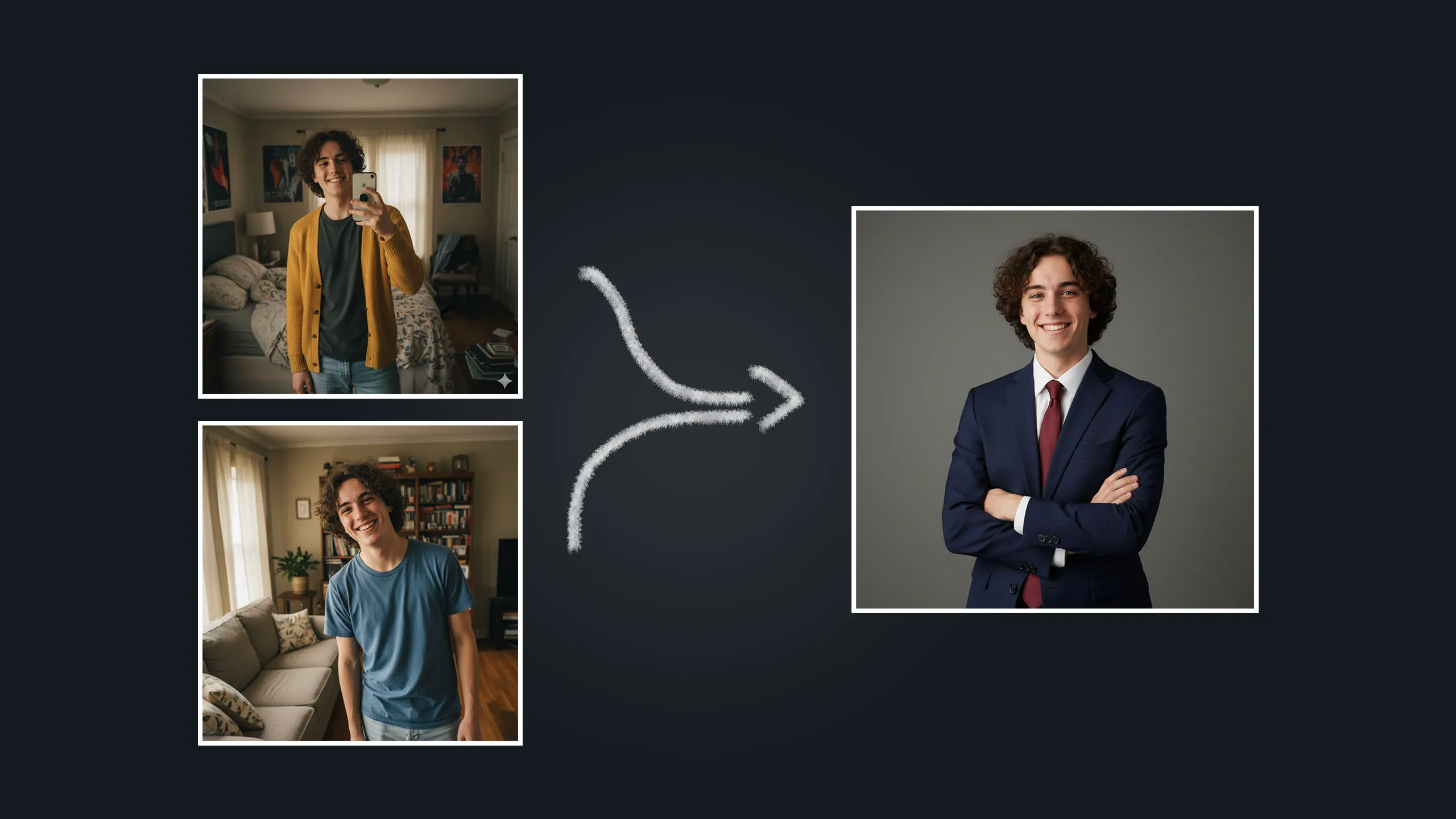

Naturally, as a developer in need of a professional business headshot, I built an entire AI business headshot service. You can try it, too!

Gemma 3n is a new open large language model by Google that combines two models into one, offers multimodal capabilities, and runs locally with reduced memory requirements. Here's why it may be amazing.

You can get more done by leveraging LLMs and generative AI. But it's probably not so much about an "AI Agent Army" as it is about using AI for specific tasks. Here are some examples of how I use AI in my daily work.

Large Language Models (LLMs) are great at generating code, but they often default to a narrow set of technologies. This could stifle innovation and lead to outdated practices.

Running open LLMs locally or self-hosting them is great, but it can be a hassle (AND may require significant resources). Services like Amazon Bedrock allow you to use (and pay for) open models on-demand, without the hassle of self-hosting.

Many large language models (LLMs) can be run on your own computer. And whilst you don't need a supercomputer, having a decent GPU and enough VRAM will help a lot. Here's why.
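To see why VRAM matters, here is a rough back-of-the-envelope calculation sketched in TypeScript. It assumes the model weights dominate memory use (parameter count × bytes per parameter) and ignores the KV cache, activations and runtime overhead, so real requirements will be higher. The 7-billion-parameter model is only an illustrative example.

```typescript
// Rough, weights-only memory estimate for running an LLM locally.
// Assumption: memory ≈ parameter count × bytes per parameter.
// Real usage is higher (KV cache, activations, runtime overhead).

type Precision = "fp32" | "fp16" | "int8" | "int4";

// Bits used to store a single weight at each precision.
const BITS_PER_WEIGHT: Record<Precision, number> = {
  fp32: 32,
  fp16: 16,
  int8: 8,
  int4: 4,
};

// Returns the approximate size of the model weights in gigabytes (10^9 bytes).
function estimateWeightMemoryGB(params: number, precision: Precision): number {
  const bytes = (params * BITS_PER_WEIGHT[precision]) / 8;
  return bytes / 1e9;
}

// Example: a hypothetical 7-billion-parameter model at different precisions.
for (const p of ["fp32", "fp16", "int8", "int4"] as Precision[]) {
  console.log(`${p}: ~${estimateWeightMemoryGB(7e9, p).toFixed(1)} GB`);
}
```

The same arithmetic explains why lower-precision (quantized) weights make local inference feasible on consumer GPUs: halving the bits per weight roughly halves the memory the weights need.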

AI coding assistants are powerful tools and, to some extent, they can replace developers. But they are not a replacement for coding skills. In fact, they make coding skills even more important. The danger is that we might forget that.

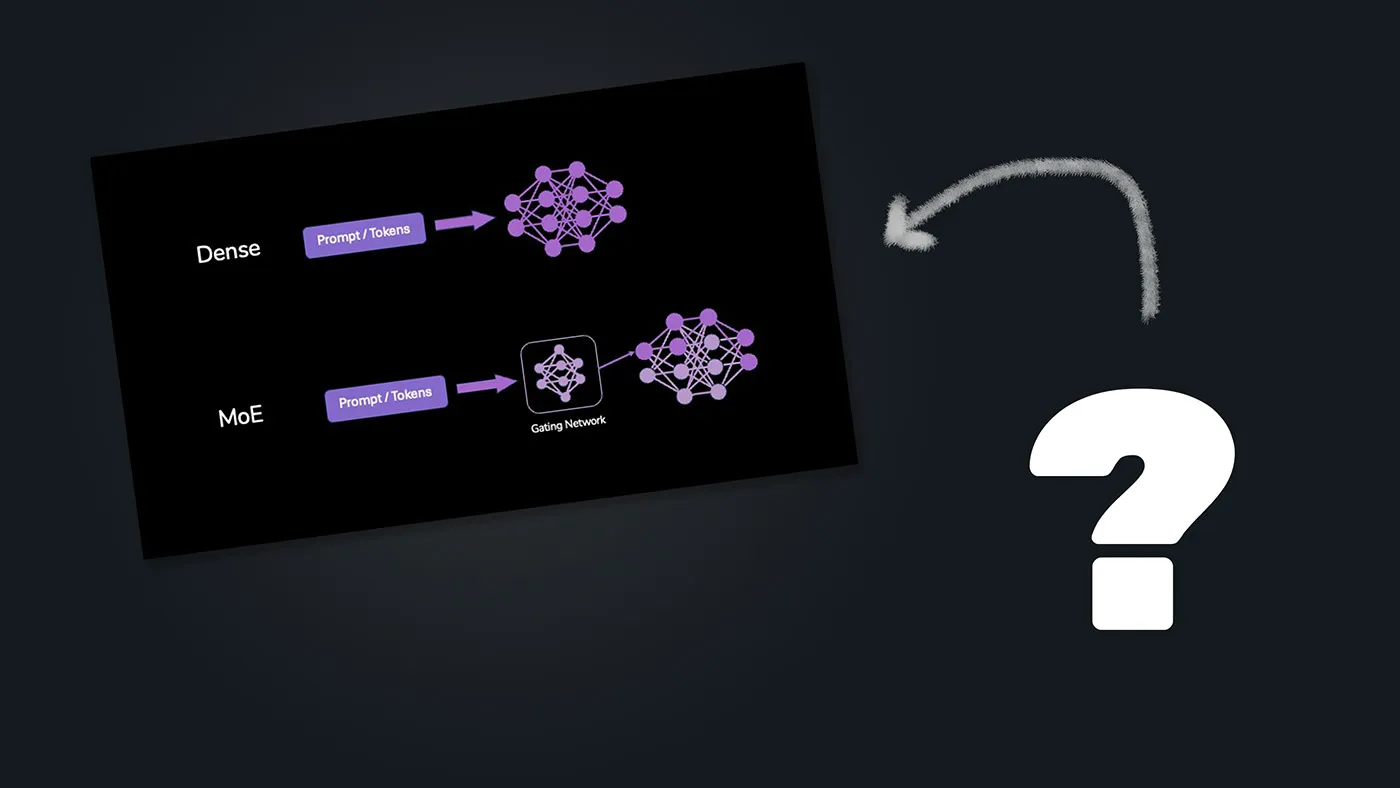

Mixture of Experts (MoE) LLMs promise faster inference than traditional dense models. But the model names can be confusing, and a surprise might await when you try to run them locally.
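A toy sketch of the routing idea behind MoE models, in TypeScript. The experts are plain hypothetical functions and the router scores are hard-coded; in a real model both are learned network layers. What it illustrates: only the top-k experts are evaluated per input, yet every expert must still be available, which is why an MoE model's memory footprint follows its total parameter count rather than its smaller number of active parameters.

```typescript
// Toy Mixture-of-Experts layer: the router scores every expert,
// but only the top-k experts actually run for a given input.

type Expert = (x: number) => number;

// Numerically stable softmax over an array of scores.
function softmax(scores: number[]): number[] {
  const max = Math.max(...scores);
  const exps = scores.map((s) => Math.exp(s - max));
  const sum = exps.reduce((a, b) => a + b, 0);
  return exps.map((e) => e / sum);
}

function moeForward(
  x: number,
  experts: Expert[],
  routerScores: number[],
  k: number,
): { output: number; used: number[] } {
  // Pick the indices of the k highest-scoring experts.
  const used = routerScores
    .map((score, index) => ({ score, index }))
    .sort((a, b) => b.score - a.score)
    .slice(0, k)
    .map((e) => e.index);

  // Re-normalize the chosen experts' scores into mixing weights.
  const weights = softmax(used.map((i) => routerScores[i]));

  // Only the selected experts are evaluated; all others are skipped.
  const output = used.reduce((sum, i, j) => sum + weights[j] * experts[i](x), 0);
  return { output, used };
}

// Four hypothetical experts; all four must be loaded even though only two run.
const experts: Expert[] = [(x) => x + 1, (x) => 2 * x, (x) => x * x, (x) => -x];
const result = moeForward(3, experts, [0.1, 2.0, 2.0, -1.0], 2);
console.log(result.output, result.used); // output 7.5, using experts 1 and 2
```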

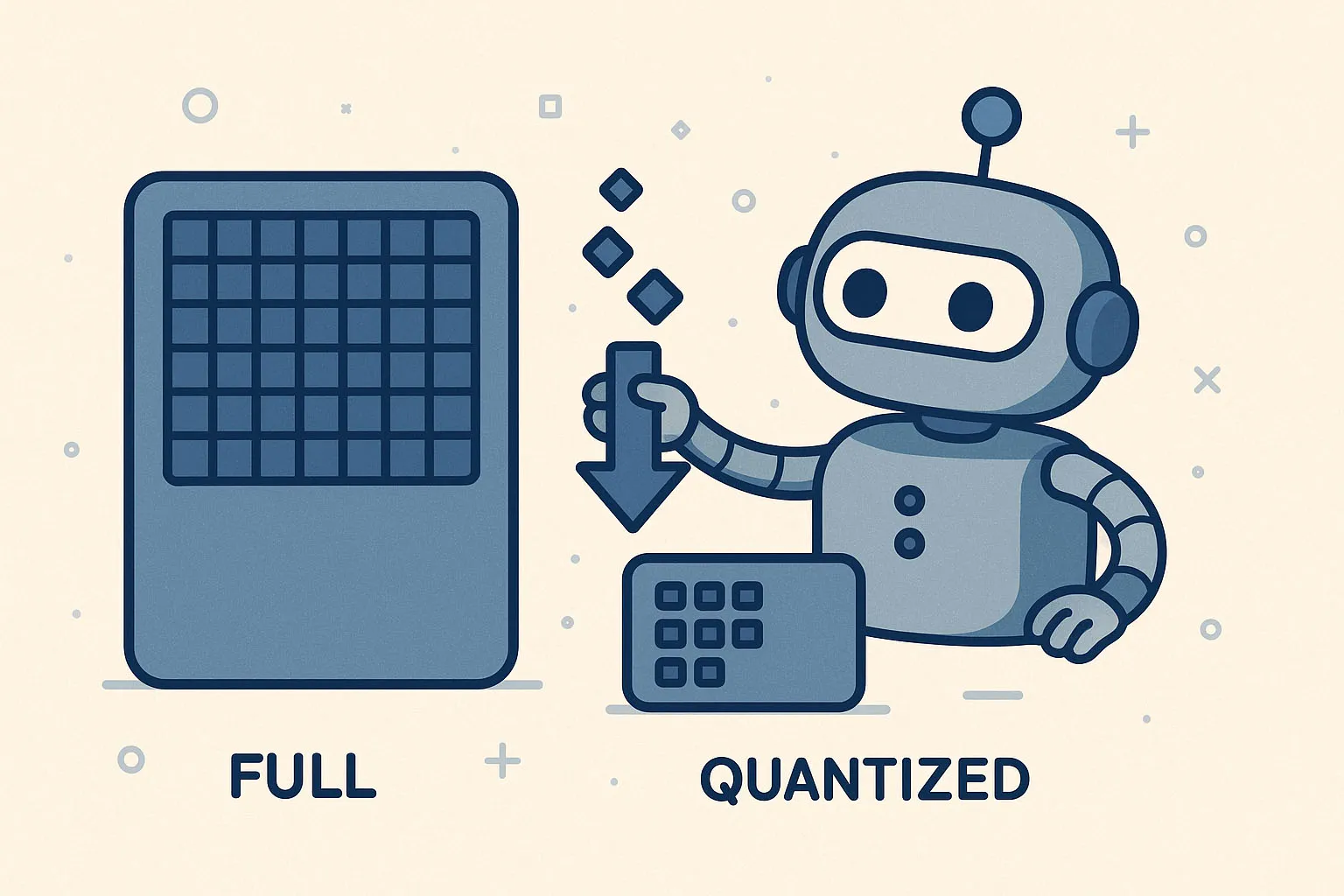

Quantization is a technique that essentially "compresses" the weights of an LLM, allowing it to run on far less (V)RAM than it would otherwise need. This is a game changer for running open LLMs like Gemma, Qwen or Llama locally.
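A minimal sketch of the core idea, assuming simple symmetric 8-bit quantization with one shared scale for the whole tensor. Real quantization formats usually quantize small blocks of weights with their own scales and often go down to 4 bits or fewer, so treat this as an illustration of the principle, not of how any particular tool does it.

```typescript
// Minimal symmetric int8 quantization of a weight vector.
// Each float is mapped to an integer in [-127, 127] using one shared scale.

interface QuantizedTensor {
  values: Int8Array; // 1 byte per weight instead of 4 (Float32)
  scale: number; // multiply by this to recover approximate floats
}

function quantizeInt8(weights: Float32Array): QuantizedTensor {
  // The largest absolute weight determines the scale.
  let maxAbs = 0;
  for (let i = 0; i < weights.length; i++) {
    maxAbs = Math.max(maxAbs, Math.abs(weights[i]));
  }
  const scale = maxAbs === 0 ? 1 : maxAbs / 127;

  const values = new Int8Array(weights.length);
  for (let i = 0; i < weights.length; i++) {
    values[i] = Math.round(weights[i] / scale);
  }
  return { values, scale };
}

function dequantizeInt8(q: QuantizedTensor): Float32Array {
  const out = new Float32Array(q.values.length);
  for (let i = 0; i < q.values.length; i++) {
    out[i] = q.values[i] * q.scale;
  }
  return out;
}

// Example: four made-up weights shrink from 16 bytes to 4 bytes.
const w = new Float32Array([0.5, -1.27, 0, 1.0]);
const q = quantizeInt8(w);
console.log(q.values, dequantizeInt8(q), `${w.byteLength} -> ${q.values.byteLength} bytes`);
```

The trade-off is precision: each recovered weight can be off by up to half a scale step, which is the accuracy cost quantized models pay for their smaller memory footprint.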

You don't need ChatGPT or Google Gemini for everything. Running open LLMs locally is a great way to save costs and have more control over your data. It's super easy, you don't need a supercomputer, and those models are way better than you may think.

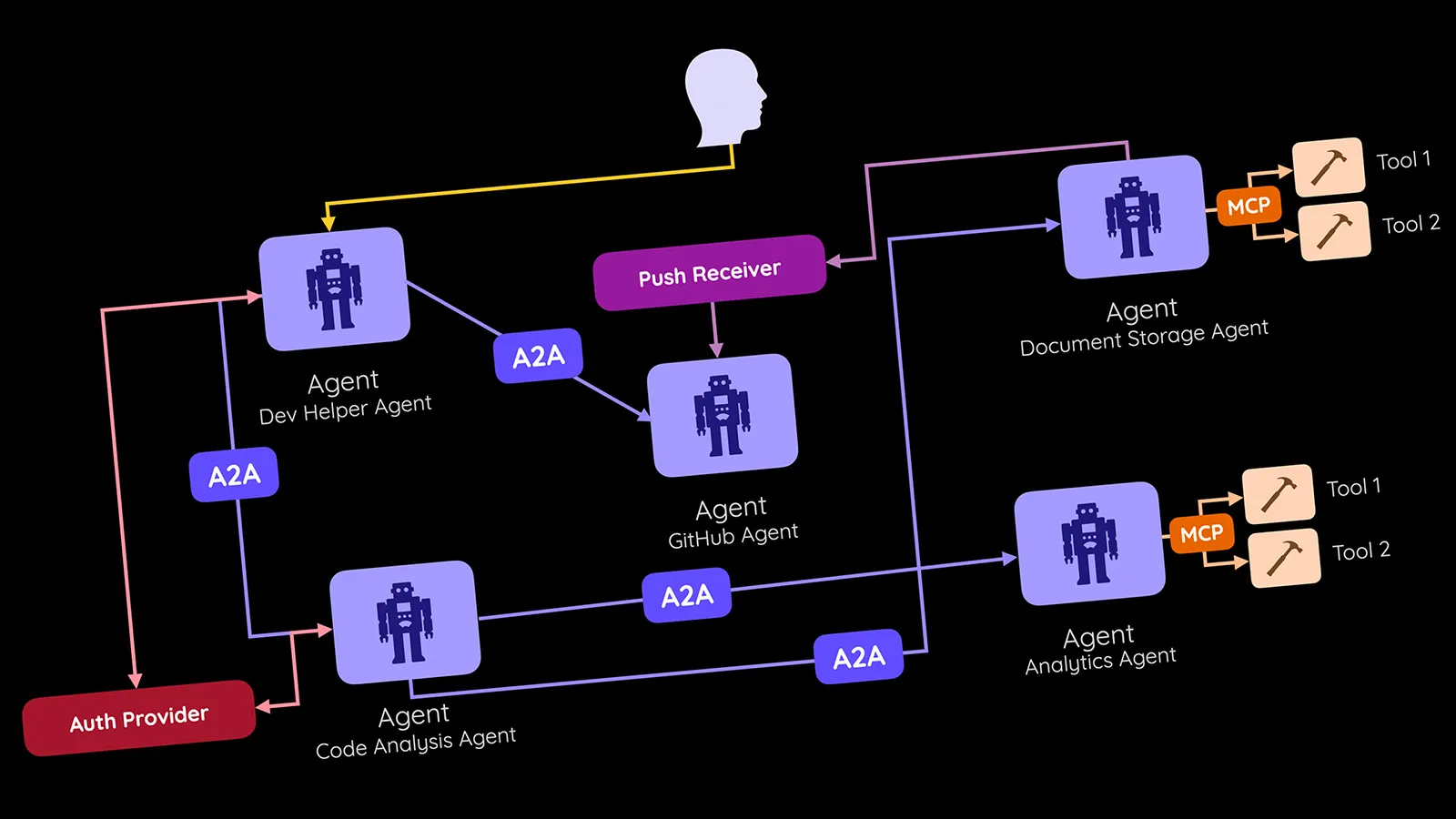

Google's new A2A (Agent-to-Agent) Protocol aims to standardize how different AI agents communicate. But which problems does it solve? How does it relate to MCP (Model Context Protocol)? And is it all just hype?

You might not need WebSockets! Server-Sent Events (SSE) are a simple yet powerful alternative to WebSockets for unidirectional data streaming from server to client. Perfect for real-time updates with automatic reconnection and easy integration.
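A minimal SSE endpoint sketch using Node.js' built-in `node:http` module, assuming your tooling can run TypeScript with the Node.js type definitions available. The `/events` path, the `tick` event name and the one-second interval are hypothetical choices for illustration. The server never calls `startServer` on its own; call it yourself to try it.

```typescript
import { createServer, type IncomingMessage, type ServerResponse } from "node:http";

// Serializes one event in the text/event-stream format: optional "event:"
// and "id:" fields, one "data:" line per payload line, then a blank line.
function formatSseEvent(data: string, event?: string, id?: string): string {
  let message = "";
  if (event) message += `event: ${event}\n`;
  if (id) message += `id: ${id}\n`;
  for (const line of data.split("\n")) message += `data: ${line}\n`;
  return message + "\n";
}

// Hypothetical endpoint: pushes the current time once per second.
function handleEvents(req: IncomingMessage, res: ServerResponse): void {
  res.writeHead(200, {
    "Content-Type": "text/event-stream",
    "Cache-Control": "no-cache",
    Connection: "keep-alive",
  });

  let counter = 0;
  const timer = setInterval(() => {
    counter++;
    res.write(formatSseEvent(new Date().toISOString(), "tick", String(counter)));
  }, 1000);

  // Stop writing once the client disconnects.
  req.on("close", () => clearInterval(timer));
}

// Not invoked here; e.g. startServer(3000) to try it out.
function startServer(port: number) {
  return createServer((req, res) => {
    if (req.url === "/events") handleEvents(req, res);
    else res.writeHead(404).end();
  }).listen(port);
}
```

On the client, `new EventSource("/events")` opens the stream and `source.addEventListener("tick", (e) => ...)` receives each event's `data`. If the connection drops, the browser reconnects by itself and sends the last `id` it received in the `Last-Event-ID` header, which is where the automatic reconnection comes from.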

AI can boost developer productivity - it's not just marketing. But it's also not a magic "vibe coding" wand. Instead, it's all about TAB, TAB, TAB and the occasional chat.

There's a new buzzword in AI-town: MCP - or 'Model Context Protocol'. But what is it? And why should you care despite the annoying hype?
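Under the hood, MCP messages are JSON-RPC 2.0 requests and responses. The sketch below only builds the request a client might send to call a tool; the transport (for example stdio or HTTP) and the initialization handshake are left out. The `get_weather` tool and its arguments are hypothetical.

```typescript
// Shape of a JSON-RPC 2.0 request, the message format MCP builds on.
interface JsonRpcRequest<P> {
  jsonrpc: "2.0";
  id: number;
  method: string;
  params?: P;
}

// Parameters of a "tools/call" request: which tool, and with what input.
interface ToolCallParams {
  name: string;
  arguments: Record<string, unknown>;
}

let nextId = 1;

function buildToolCall(
  name: string,
  args: Record<string, unknown>,
): JsonRpcRequest<ToolCallParams> {
  return {
    jsonrpc: "2.0",
    id: nextId++, // lets the client match the server's response to this request
    method: "tools/call",
    params: { name, arguments: args },
  };
}

// Hypothetical tool; a real server advertises its tools via "tools/list".
const request = buildToolCall("get_weather", { city: "Munich" });
console.log(JSON.stringify(request));
```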

You often hear (and maybe even say) it: Something compiles to "machine code". For example Go. Or Rust. But what does that actually mean? And how does it differ from "byte code"?
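To make the distinction concrete, here is a toy stack-based virtual machine in TypeScript. Its "bytecode" is just an array of numbers that this interpreter understands; the CPU never executes those numbers directly. Machine code, by contrast, consists of instructions the CPU runs itself. The opcodes are invented for this example and don't correspond to any real VM such as the JVM.

```typescript
// A tiny stack-based virtual machine with four invented opcodes.
const Op = {
  Push: 0, // Push <value>: push the following number onto the stack
  Add: 1, // pop two values, push their sum
  Mul: 2, // pop two values, push their product
  Halt: 3, // stop and return the top of the stack
} as const;

function run(bytecode: number[]): number {
  const stack: number[] = [];
  let pc = 0; // program counter: index of the next instruction

  while (pc < bytecode.length) {
    const op = bytecode[pc++];
    switch (op) {
      case Op.Push:
        stack.push(bytecode[pc++]);
        break;
      case Op.Add: {
        const b = stack.pop()!;
        const a = stack.pop()!;
        stack.push(a + b);
        break;
      }
      case Op.Mul: {
        const b = stack.pop()!;
        const a = stack.pop()!;
        stack.push(a * b);
        break;
      }
      case Op.Halt:
        return stack.pop()!;
      default:
        throw new Error(`Unknown opcode ${op} at position ${pc - 1}`);
    }
  }
  throw new Error("Program ended without Halt");
}

// (2 + 3) * 4, encoded as bytecode for this VM
const program = [Op.Push, 2, Op.Push, 3, Op.Add, Op.Push, 4, Op.Mul, Op.Halt];
console.log(run(program)); // 20
```

Languages like Java compile to bytecode for a (far more sophisticated) VM like this, while Go and Rust compilers emit machine code for the target CPU directly.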

The TypeScript team shared that they're porting their compiler and type checker to Go. Not everyone's happy about the free performance gain, though. The question is: Why not Rust?

Vibe coding, a term coined by Andrej Karpathy, is all about letting LLMs generate code for you. I'm not convinced it's the future of coding, though.

AI is here to stay and it WILL likely replace developers. Developers who don't leverage its advantages. The same will happen to developers who rely too much on AI assistants, though.

Chances are high that you're using unnecessary third-party libraries. Stop wasting modern Node.js' potential!
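A few examples of built-in Node.js features that can stand in for commonly installed third-party packages. Which packages you can actually drop depends on your project; these pairings are illustrative picks, assuming Node.js 20 or newer, where all of the APIs used below are available.

```typescript
import { randomUUID } from "node:crypto";
import { parseArgs } from "node:util";

// 1) Unique IDs: crypto.randomUUID() instead of a UUID package.
const id = randomUUID();

// 2) Deep copies: the global structuredClone() instead of a deep-clone helper.
const config = { server: { port: 3000 }, tags: ["a", "b"] };
const copy = structuredClone(config);
copy.server.port = 8080; // does not affect the original object

// 3) CLI flags: util.parseArgs() instead of an argument-parsing package.
//    A fixed args array is used here; omit `args` to parse process.argv.
const { values } = parseArgs({
  args: ["--port", "8080", "--verbose"],
  options: {
    port: { type: "string" },
    verbose: { type: "boolean", short: "v" },
  },
});

// 4) HTTP requests: the global fetch() instead of an HTTP client package.
async function getJson(url: string): Promise<unknown> {
  const response = await fetch(url);
  if (!response.ok) throw new Error(`HTTP ${response.status}`);
  return response.json();
}

console.log(id, config.server.port, copy.server.port, values.port, values.verbose);
```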

Most developers don't touch the tsconfig.json file, and for good reason! It has many options and settings with non-descriptive names and unclear effects. It doesn't have to be like that, though.
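As an example of a setting with a non-obvious effect: `noUncheckedIndexedAccess` (which `strict` does not enable) makes TypeScript treat index access on arrays and records as possibly `undefined`. The function below is a hypothetical illustration; it compiles with or without the option, but a bare `return first;` would become a type error once the option is turned on.

```typescript
// With "noUncheckedIndexedAccess": true in tsconfig.json, scores[0] is typed
// as number | undefined instead of number, so the empty-array case must be handled.
function firstScore(scores: number[]): number {
  const first = scores[0]; // number by default; number | undefined with the flag
  return first ?? 0; // fall back to 0 when the array is empty
}

console.log(firstScore([])); // 0
console.log(firstScore([42, 7])); // 42
```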