Making Sense of Google's A2A Protocol

You’ve probably heard it – 2025 is supposedly the “Year of the AI Agent”. There’s a lot of hype around the term “AI Agent”.

It therefore makes a lot of sense that Google recently dropped a suite of AI agent-related products and initiatives, including something called the A2A Protocol (Agent-to-Agent Protocol).

So what is the “A2A Protocol”?

It’s actually an interesting technical proposal trying to solve a potentially real future problem.

The core idea is to create a standardized way for different AI agents to communicate and collaborate with each other.

Why Do We Need Agents Talking To Each Other Anyway?

Before diving into A2A, let’s understand why we might even want multiple agents instead of just one giant, all-knowing AI.

Think about software today. We don’t have one single application that does everything. Instead, we have specialized tools: one for email, one for spreadsheets, one for code editing, one for image manipulation.

And there are good reasons for that:

- Complexity: Building one app to do everything well is incredibly hard.

- Specialization: Different tasks require different approaches (and underlying experience & tech).

- Security/Permissions: You don’t want your calculator app to have access to your bank account.

- Avoiding Vendor Lock-in: Using specialized tools from different vendors gives you the flexibility to swap one out without replacing everything else.

I’d argue the same logic kind of applies to AI agents (whether they become a real, usable thing is a different question, though).

But AI agents add one more reason: model specialization. Different AI models excel at different things. A coding agent might use a model that is great at code generation, while a report-writing agent uses one optimized for long-form text. Fine-tuning makes this even more pronounced. One agent trying to do everything would likely use a jack-of-all-trades model, master of none.

So, the likely future isn’t one super-agent, but an ecosystem of specialized agents, maybe running on your machine, your company’s servers, or offered by various cloud providers.

The Problem: Wiring Agents Together Manually

Okay, so we have multiple agents.

How do they work together on complex tasks that require multiple skills (e.g., research documents, summarize findings, draft an email)?

Right now, if you build a multi-agent system (using tools like LangChain/LangGraph, Google’s new Agent Development Kit (ADK), or platforms like n8n), you manually define how these agents connect and pass information. You control the whole system.

But what happens when you want your locally-built research agent to collaborate with a specialized financial analysis agent offered by “FinCorp AI”? Or use a “Creative Writer Pro” agent from another company?

Without a standard, you’d need custom integrations for every pair of agents.
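To put a rough number on "every pair": if each of n agents needs bespoke glue code for every other agent, that's n(n-1)/2 integrations, whereas with a shared protocol each agent implements it once, so roughly n implementations. A quick back-of-the-envelope sketch (this simple model assumes every pair actually needs to talk and ignores one-directional integrations):

```python
def pairwise_integrations(n: int) -> int:
    """Custom integrations needed if every pair of agents gets its own bespoke glue code."""
    return n * (n - 1) // 2


def protocol_implementations(n: int) -> int:
    """Implementations needed if every agent speaks one shared protocol instead."""
    return n


for n in (3, 10, 50):
    print(f"{n} agents: {pairwise_integrations(n)} pairwise integrations vs. "
          f"{protocol_implementations(n)} protocol implementations")
```

At 10 agents that's already 45 custom integrations versus 10 protocol implementations, and the gap grows quadratically from there.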

How A2A Aims to Solve This: Standardization

The A2A Protocol attempts to solve this by defining a common “language” and set of rules for how agents interact.

It aims to enable:

- Discoverability: How does an agent find out what other agents exist and what they can do?

- Communication: How do agents exchange tasks and results in a structured way?

- Asynchronous Operations: How do they handle tasks that might take minutes, hours, or even days?

A2A Protocol - The Building Blocks

Based on Google’s initial proposal, the A2A protocol includes several key components (by the way, the full tech spec is available online):

Agent Cards:

- This is how an agent describes itself. It’s essentially a JSON metadata file exposed by the agent’s server.

- It details the agent’s capabilities (what tasks it can perform), required authentication methods (if any), and supported communication mechanisms (like push notifications).

- This allows other agents (or the systems orchestrating them) to understand if and how they can interact with this agent.
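To make this more concrete, here's a rough sketch of what such an Agent Card might contain, written as a Python dict and serialized to JSON. The field names (`capabilities`, `authentication`, `skills`, and so on) are loosely modeled on Google's early draft and may well change; the "FinCorp" agent, its URL and its skill are hypothetical. The well-known path in `well_known_card_url` is also an assumption based on the early draft, which suggested publishing the card at a fixed location so clients can discover it.

```python
import json

# Hypothetical Agent Card for a fictional "FinCorp AI" agent.
# Field names are loosely modeled on the early A2A draft and may differ from the current spec.
agent_card = {
    "name": "FinCorp Financial Analyst",
    "description": "Analyzes financial reports and produces risk summaries.",
    "url": "https://agents.fincorp.example/a2a",  # hypothetical endpoint
    "version": "1.0.0",
    "capabilities": {
        "streaming": True,           # can send Server-Sent Events
        "pushNotifications": False,  # cannot call back a client-provided URL
    },
    "authentication": {"schemes": ["bearer"]},
    "defaultInputModes": ["text", "file"],
    "defaultOutputModes": ["text"],
    "skills": [
        {
            "id": "risk-summary",
            "name": "Risk summary",
            "description": "Summarizes the key risks in a financial report.",
            "tags": ["finance", "summarization"],
        }
    ],
}


def well_known_card_url(base_url: str) -> str:
    """Build the URL where a client would look for an agent's card (path is an assumption)."""
    return base_url.rstrip("/") + "/.well-known/agent.json"


def supports(card: dict, capability: str) -> bool:
    """Check whether the card advertises a given capability."""
    return bool(card.get("capabilities", {}).get(capability, False))


print(json.dumps(agent_card, indent=2))
print(well_known_card_url("https://agents.fincorp.example/"))
print(supports(agent_card, "streaming"))
```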

Authentication:

- If an agent card indicates authentication is needed, it will also specify how to authenticate (e.g., get a session token from a specific provider URL).

- The requesting agent (the “client”) is responsible for obtaining the necessary credentials and including them in its requests to the “server” agent.
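Here's a minimal sketch of that client-side responsibility, assuming the card lists a `bearer` scheme under `authentication.schemes` (a simplified shape, not necessarily the spec's exact layout). `fetch_token` is a hypothetical stand-in for whatever flow the provider actually requires; the sketch only builds the HTTP request with Python's standard `urllib.request` and never sends it.

```python
import json
import urllib.request


def fetch_token(provider_url: str) -> str:
    """Hypothetical stand-in: a real client would obtain a token from provider_url."""
    return "example-session-token"


def build_authenticated_request(card: dict, payload: dict,
                                token_provider_url: str) -> urllib.request.Request:
    """Build (but don't send) a POST to the agent, adding credentials if the card requires them."""
    headers = {"Content-Type": "application/json"}
    schemes = card.get("authentication", {}).get("schemes", [])
    if "bearer" in schemes:
        token = fetch_token(token_provider_url)
        headers["Authorization"] = f"Bearer {token}"
    return urllib.request.Request(
        card["url"],
        data=json.dumps(payload).encode("utf-8"),
        headers=headers,
        method="POST",
    )


card = {"url": "https://agents.fincorp.example/a2a", "authentication": {"schemes": ["bearer"]}}
req = build_authenticated_request(card, {"hello": "agent"}, "https://auth.fincorp.example/token")
print(req.get_header("Authorization"))
```

If the card lists no scheme, no `Authorization` header is added at all, which matches the "if any" in the card's description.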

Task Exchange:

- The core interaction involves sending a task description.

- This isn’t just plain text. It’s structured as a “message” containing metadata (like a session ID) and one or more “parts”.

- Parts can be text (e.g., “Summarize the attached document”) but also other data types like files or images.

- The receiving agent processes the task and sends back a response, also structured as a message with parts (e.g., the summary text, or generated files).
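A sketch of such a task request, building a message with a text part and a file part. As far as I can tell, the early draft wraps requests in JSON-RPC 2.0 and uses a method called `tasks/send`, with parts shaped like `{"type": "text", ...}` or `{"type": "file", ...}`. Treat all of these names as assumptions, since the spec is explicitly still evolving; the session ID and file contents are made up.

```python
import base64
import json
import uuid


def text_part(text: str) -> dict:
    return {"type": "text", "text": text}


def file_part(name: str, mime_type: str, content: bytes) -> dict:
    # The file is embedded as base64 here; the draft also allows referencing files by URI.
    return {
        "type": "file",
        "file": {"name": name, "mimeType": mime_type,
                 "bytes": base64.b64encode(content).decode("ascii")},
    }


def build_task_request(session_id: str, parts: list) -> dict:
    """Wrap a user message in a JSON-RPC 2.0 envelope (method name assumed from the early draft)."""
    return {
        "jsonrpc": "2.0",
        "id": str(uuid.uuid4()),          # JSON-RPC request id
        "method": "tasks/send",
        "params": {
            "id": str(uuid.uuid4()),      # task id, generated by the client in this sketch
            "sessionId": session_id,
            "message": {"role": "user", "parts": parts},
        },
    }


request = build_task_request(
    "session-123",
    [
        text_part("Summarize the attached document"),
        file_part("report.txt", "text/plain", b"Example report contents."),
    ],
)
print(json.dumps(request, indent=2))
```

The response would mirror this shape: a message with `role` set to the agent and its own list of parts.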

Handling Long Tasks (Asynchronous Communication):

- Not all tasks are instant. An agent might need significant time (even days!) to complete a request. Holding an HTTP request open for days is impractical.

- A2A anticipates this. When a long task is submitted, the server agent might immediately respond with “Task scheduled”.

- How does the client agent find out when it’s done? The protocol suggests options (which would be listed in the Agent Card):

  - Polling: The client periodically asks the server “Are you done yet?”. Simple, but potentially inefficient.

  - Push Notifications: The server sends a notification to a URL provided by the client when the task is complete. The client then fetches the results. More efficient.

  - Server-Sent Events (SSE): The server maintains a connection and can push status updates (e.g., “Processing step 1”, “Processing step 2”, “Task complete”) to the client in real time (I also have a full article about SSEs!).
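Polling is the simplest of the three to sketch. Below, `FakeAgentServer` is a hypothetical in-memory stand-in for a remote agent; a real client would send an HTTP request (the early draft calls the method `tasks/get`) instead of calling a Python method. The state names `submitted`, `working`, `completed`, `failed` and `canceled` are taken from my reading of the early draft and may change.

```python
import time
from typing import Callable, List

TERMINAL_STATES = {"completed", "failed", "canceled"}


class FakeAgentServer:
    """Hypothetical stand-in for a remote agent; reports progress each time it is asked."""

    def __init__(self, states: List[str]):
        self._states = list(states)

    def get_task_state(self, task_id: str) -> str:
        # Advance one step per call, then keep reporting the final state.
        if len(self._states) > 1:
            return self._states.pop(0)
        return self._states[0]


def poll_until_done(get_state: Callable[[str], str], task_id: str,
                    interval_s: float = 1.0, max_polls: int = 100) -> str:
    """Ask "are you done yet?" until the task reaches a terminal state or we give up."""
    for _ in range(max_polls):
        state = get_state(task_id)
        if state in TERMINAL_STATES:
            return state
        time.sleep(interval_s)
    raise TimeoutError(f"task {task_id} not finished after {max_polls} polls")


server = FakeAgentServer(["submitted", "working", "working", "completed"])
print(poll_until_done(server.get_task_state, "task-42", interval_s=0.01))
```

The `interval_s` / `max_polls` trade-off is exactly the inefficiency mentioned above: poll too often and you waste requests, too rarely and you find out late. Push notifications and SSE avoid this by letting the server initiate the update.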

It’s up to the developers building an agent server to decide which of these capabilities (authentication, async methods) they implement and advertise in their Agent Card.
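On the client side, that means checking the card before deciding how to wait for results. A minimal sketch, assuming the card exposes boolean `capabilities.pushNotifications` and `capabilities.streaming` flags (names loosely based on the early draft). The preference order is my own choice, not something the spec prescribes.

```python
def choose_update_mechanism(card: dict, client_has_public_url: bool) -> str:
    """Pick how to learn about task progress, based on what the Agent Card advertises."""
    caps = card.get("capabilities", {})
    # Push needs a URL the server can reach, which many local clients don't have.
    if caps.get("pushNotifications") and client_has_public_url:
        return "push"
    # SSE gives live status updates over a single open connection.
    if caps.get("streaming"):
        return "sse"
    # Polling is the fallback that needs nothing special from either side.
    return "polling"


card = {"capabilities": {"streaming": True, "pushNotifications": True}}
print(choose_update_mechanism(card, client_has_public_url=False))  # sse
```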

So what about MCP?

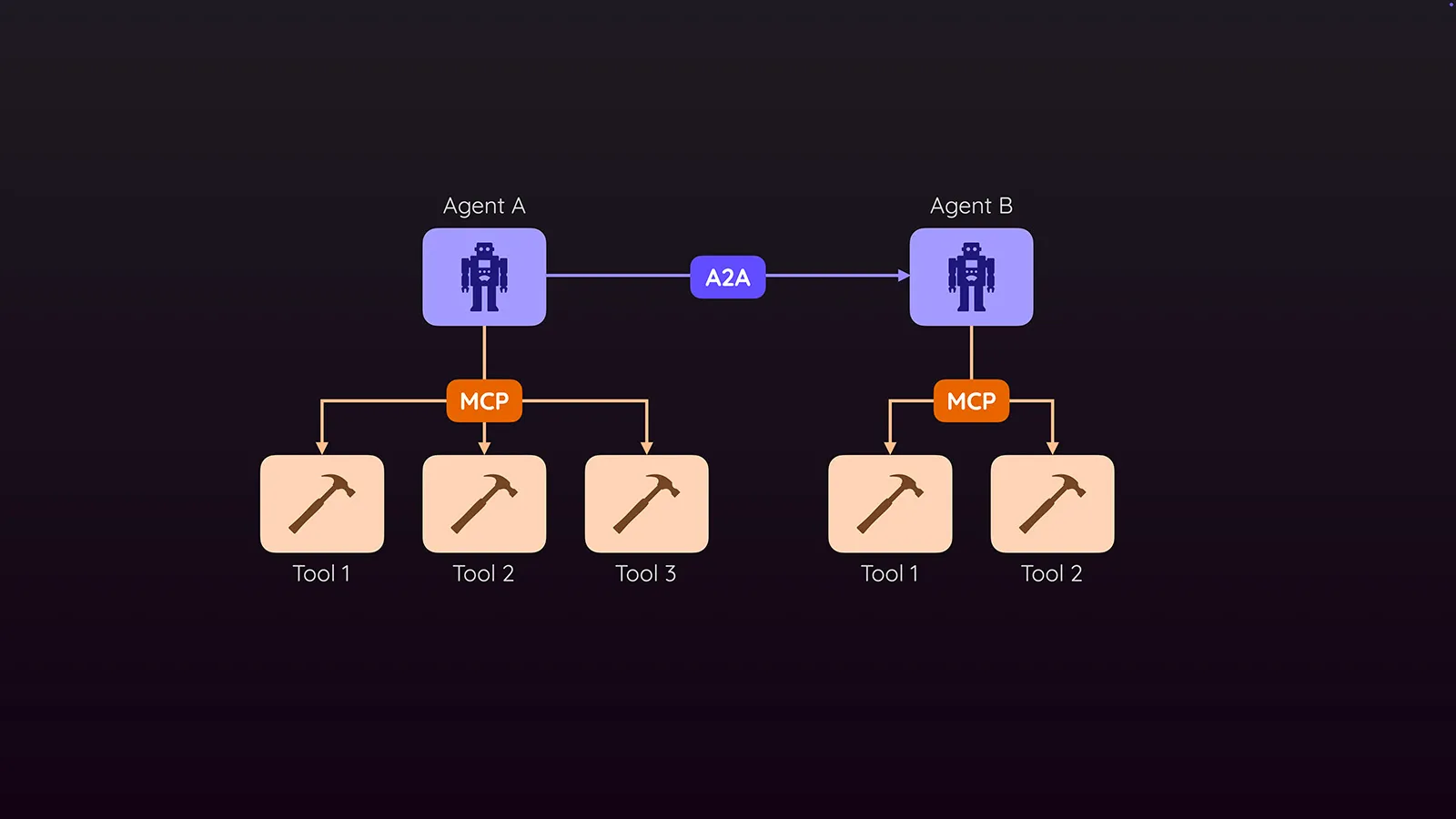

If you’ve heard of Anthropic’s MCP (Model Context Protocol), you might wonder how A2A relates. Google explicitly positions A2A as complementary to MCP (you don’t have to believe that, though).

- MCP: Standardizes how a single LLM-powered application (an agent or chatbot) discovers and uses tools (like function calls, APIs, database interactions). It’s about giving one agent more capabilities.

- A2A: Standardizes how multiple distinct agents communicate and delegate tasks to each other.

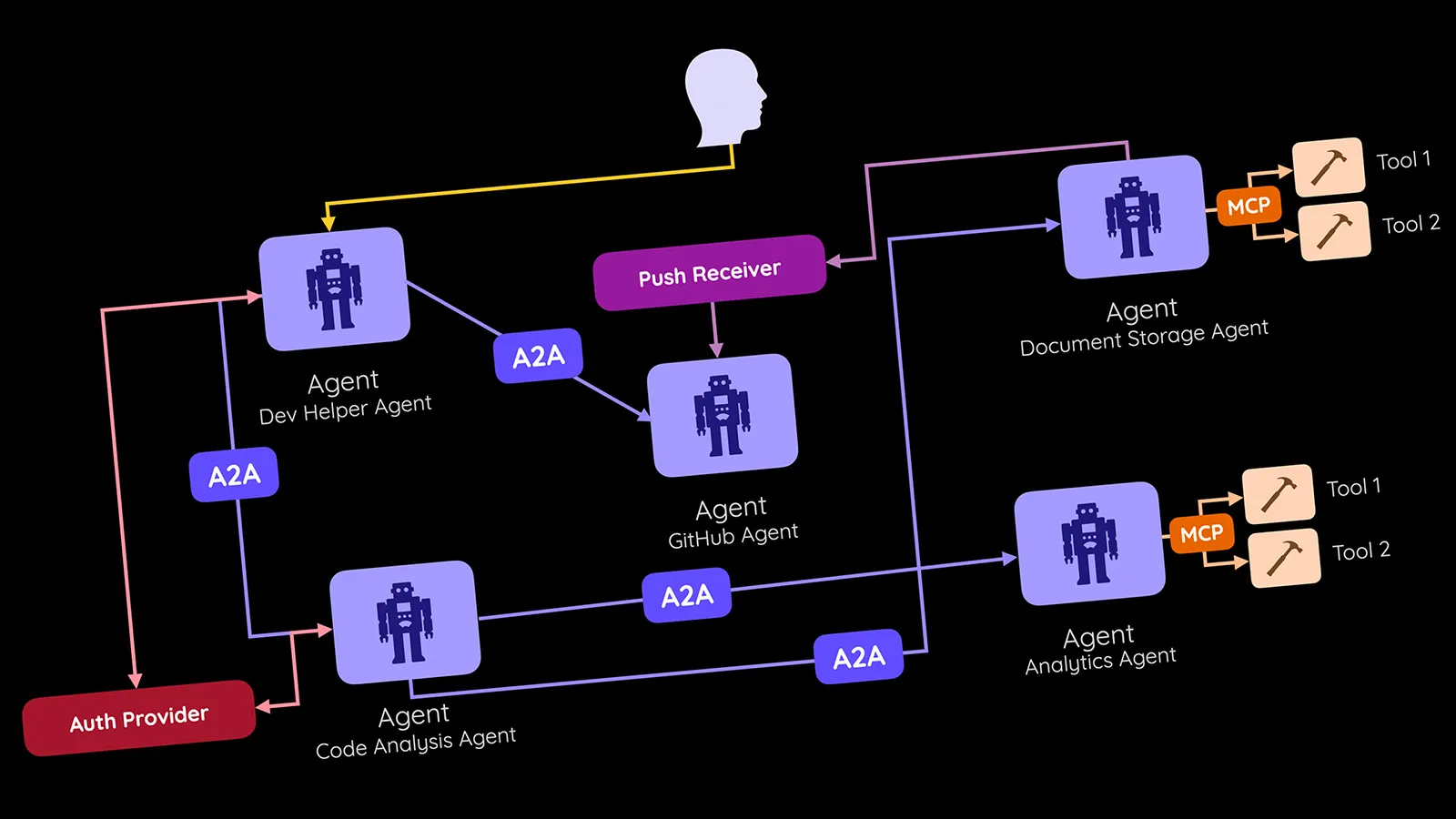

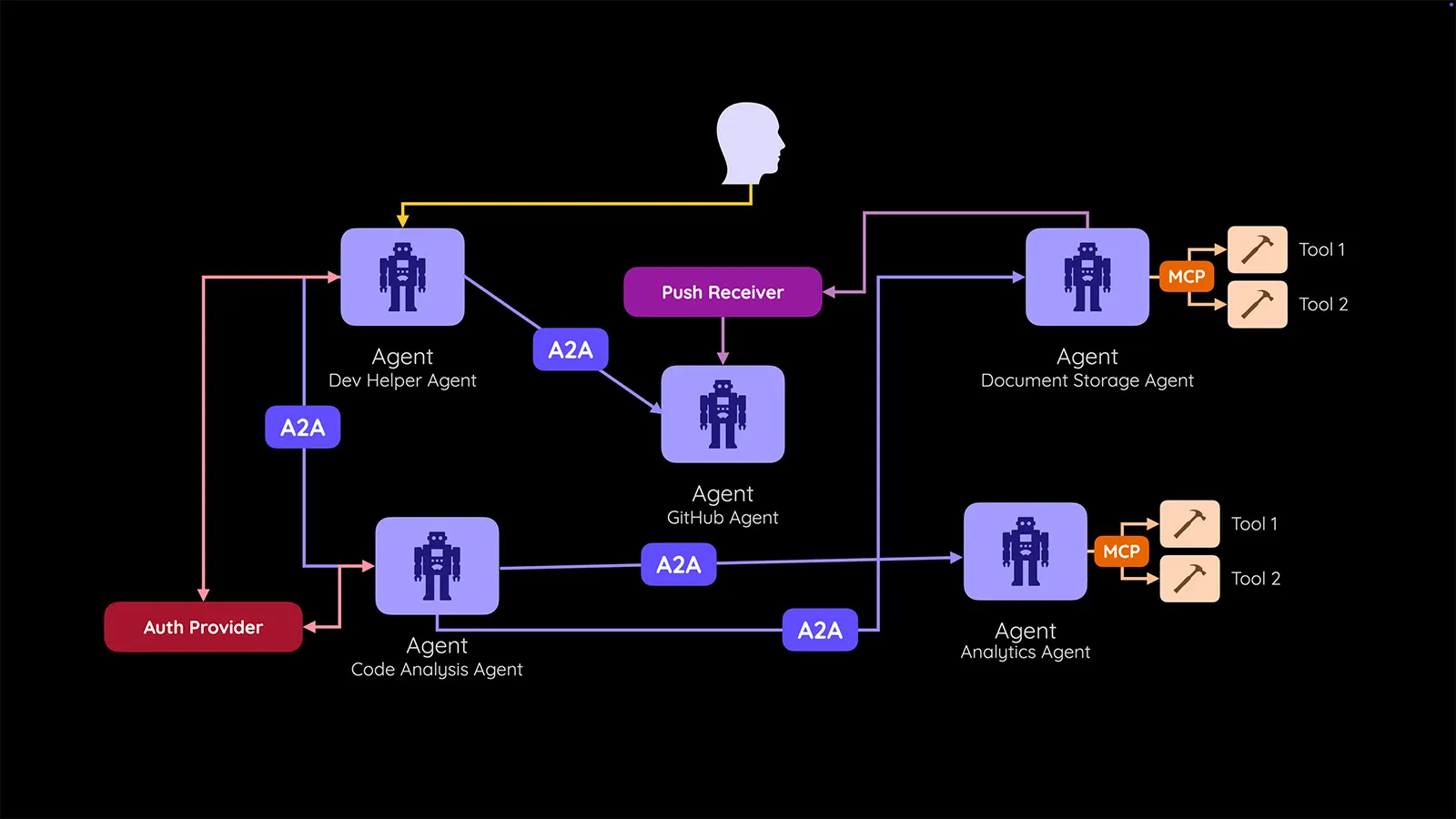

You could imagine a scenario:

- Your “Project Manager” agent receives a complex request.

- It realizes it needs code analysis. It uses A2A to find and task a specialized “Code Analyzer” agent (from a different provider).

- That “Code Analyzer” agent might internally use MCP to access tools like a linter API or a vulnerability database to fulfill the request.

- The “Code Analyzer” agent sends the results back to your “Project Manager” agent via A2A.
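The "find a Code Analyzer" step could be as simple as matching skill tags across the Agent Cards the orchestrator already knows about. A sketch, assuming cards carry a `skills` list with `tags` (loosely following the early draft); the agents and URLs are hypothetical, and a real orchestrator could just as well let an LLM choose based on the skill descriptions instead of exact tag matches.

```python
from typing import List, Optional


def find_agent_for(tag: str, cards: List[dict]) -> Optional[dict]:
    """Return the first known agent whose advertised skills include the given tag."""
    for card in cards:
        for skill in card.get("skills", []):
            if tag in skill.get("tags", []):
                return card
    return None


known_agents = [
    {"name": "Creative Writer Pro", "url": "https://writer.example/a2a",
     "skills": [{"id": "draft-copy", "tags": ["writing"]}]},
    {"name": "Code Analyzer", "url": "https://analyzer.example/a2a",
     "skills": [{"id": "static-analysis", "tags": ["code-analysis", "security"]}]},
]

match = find_agent_for("code-analysis", known_agents)
print(match["name"] if match else "no suitable agent")  # Code Analyzer
```

Once a match is found, the Project Manager agent would send the task to `match["url"]` over A2A; whatever MCP tools the Code Analyzer uses internally stay invisible to it.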

Whether we’ll actually end up using both protocols, just one, or neither, remains to be seen.

The Current State & Future Outlook

It’s crucial to remember: A2A is brand new. Google themselves state it’s essentially a draft and subject to change based on discussion and evolution.

- They’ve released example implementations in Python and JavaScript to demonstrate the concepts.

- It’s unclear how widely it will be adopted or if it will become a true standard.

- The entire vision of a widespread, interoperable multi-agent future is still speculative.

You Don’t Need A2A (Today) - But It Could Be Useful

Just like with MCP, you don’t need A2A to build multi-agent systems if you control all the agents. You can wire them up however you like.

But if we move towards a future with many independent, specialized agents from different creators needing to collaborate, a standardized protocol like A2A could be incredibly valuable. It could simplify building complex, distributed AI applications and foster a richer ecosystem.

For now, it’s an interesting proposal to watch. Will it become the TCP/IP or HTTP for AI agents? Only time will tell.